Supporting Expert Decision-Making Under Uncertainty

Designing a three-stage AI workflow where the human stays in control and the system earns trust incrementally

Framing Note

This case study describes work where the main result wasn't a finished feature, but clearer strategy, safer decisions, and better alignment across teams in a complex AI setting. The impact was in how we made decisions, not just what we built.

Context

Legal decision-making is rarely linear. It involves judgment, exceptions, and accountability, often under time pressure with real consequences.

The work started as an exploration of how AI could support expert legal review in high-risk workflows, specifically helping legal teams build, evaluate, and refine templates used to analyse contracts at scale. The problem kept changing. Technical limits were still being discovered. Cost and performance issues weren't settled. Leadership expectations shifted as we worked.

No set plan, no clear definition of done, no promise that our first idea would last through development.

As Staff Product Designer, I was responsible for helping the team handle this uncertainty without rushing into quick fixes.

The Problem We Were Really Solving

On the surface, the work appeared to be about improving an existing AI-assisted review workflow. The real problem was deeper:

- How do we introduce AI into expert workflows without undermining trust?

- When does assistance become risk rather than value?

- How do we define correctness in a domain shaped by nuance and exception?

- How do we give leadership confidence to move forward responsibly?

Without clear direction, we risked optimising for visible progress instead of choices we could defend.

Speed wasn't the challenge. Making the important tradeoffs clear to everyone was.

There was also a test I kept coming back to: if a feature requires someone to train you before you can use it, it isn't ready to ship. The product was only months old. The competition was watching. The risk of shipping something that needed hand-holding wasn't just a UX problem. It was a reputation problem.

You can be the premium product or the fast one. Not both.

My Role

I shaped both the design direction and how we made decisions about it. In practice, that meant:

- Setting the design vision for how AI should support expert judgment

- Facilitating alignment between product, engineering, and legal stakeholders

- Translating abstract risk into concrete product and design decisions

- Guiding leadership conversations about when to proceed, pause, or reframe

A lot of the work happened before we touched the UI: how to frame the problem, where to start in a three-stage workflow, how to guide without overwhelming, and what to show when.

What Made This Work Hard

The team didn't share a single way of thinking about the problem. Different disciplines optimised for different outcomes:

- Product: balanced momentum and delivery expectations

- Engineering: needed clarity around feasibility, cost, and performance

- Legal experts: prioritised defensibility, edge cases, and accountability

- Design: in the middle of all of these, translating between them

Sometimes progress in one area created problems in another. The ambiguity wasn't a failure of collaboration. It's what working in a space with no clear answers actually feels like.

My job wasn't to remove that friction, but to help turn it into something useful.

The Three-Stage Workflow

The core design challenge was a three-stage AI workflow in which human control was maintained at every stage and the AI's authority increased only as trust was earned.

Stage 1: Human sets precedent

The lawyer goes first. No AI yet. They review contracts and establish the baseline, setting the standard the AI will learn from. This grounds the system in expert judgment before any automation touches the work.

Stage 2: AI learns, human overrides

The AI applies what it learned from stage one. The lawyer reviews its responses and accepts or overrides each one, and the AI refines its output based on that feedback. Human in control throughout. The AI assists; it doesn't decide.

Stage 3: AI judge evaluates against rubric

The most automated stage, reachable only because stages one and two built the foundation. The AI evaluates responses against a rubric established by the lawyer. The lawyer can still intervene. The rubric is always visible.

The design challenge across all three stages was the same: where do you start, how do you guide without overwhelming, and how do you surface the right information at the right time to maintain trust and accuracy.

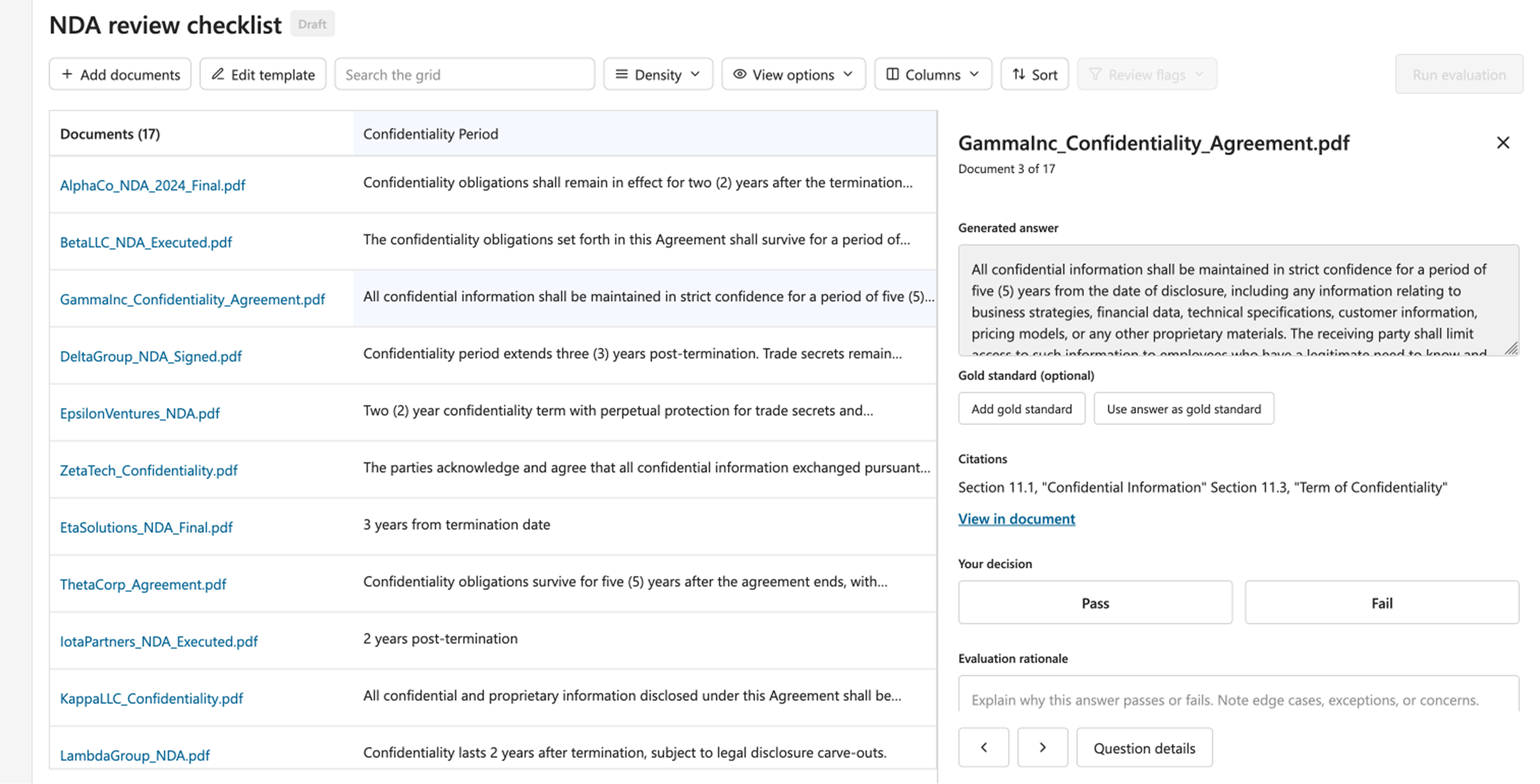

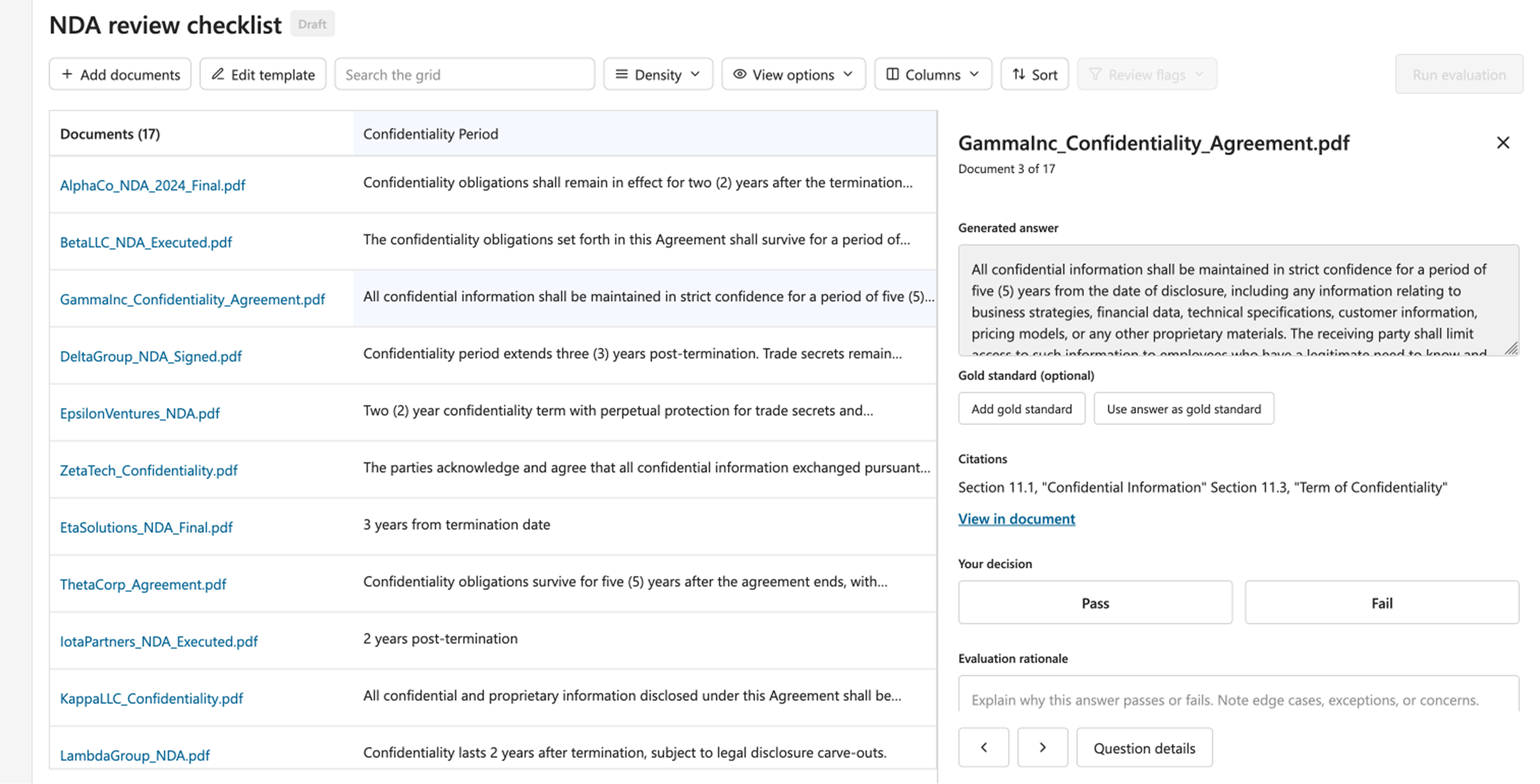

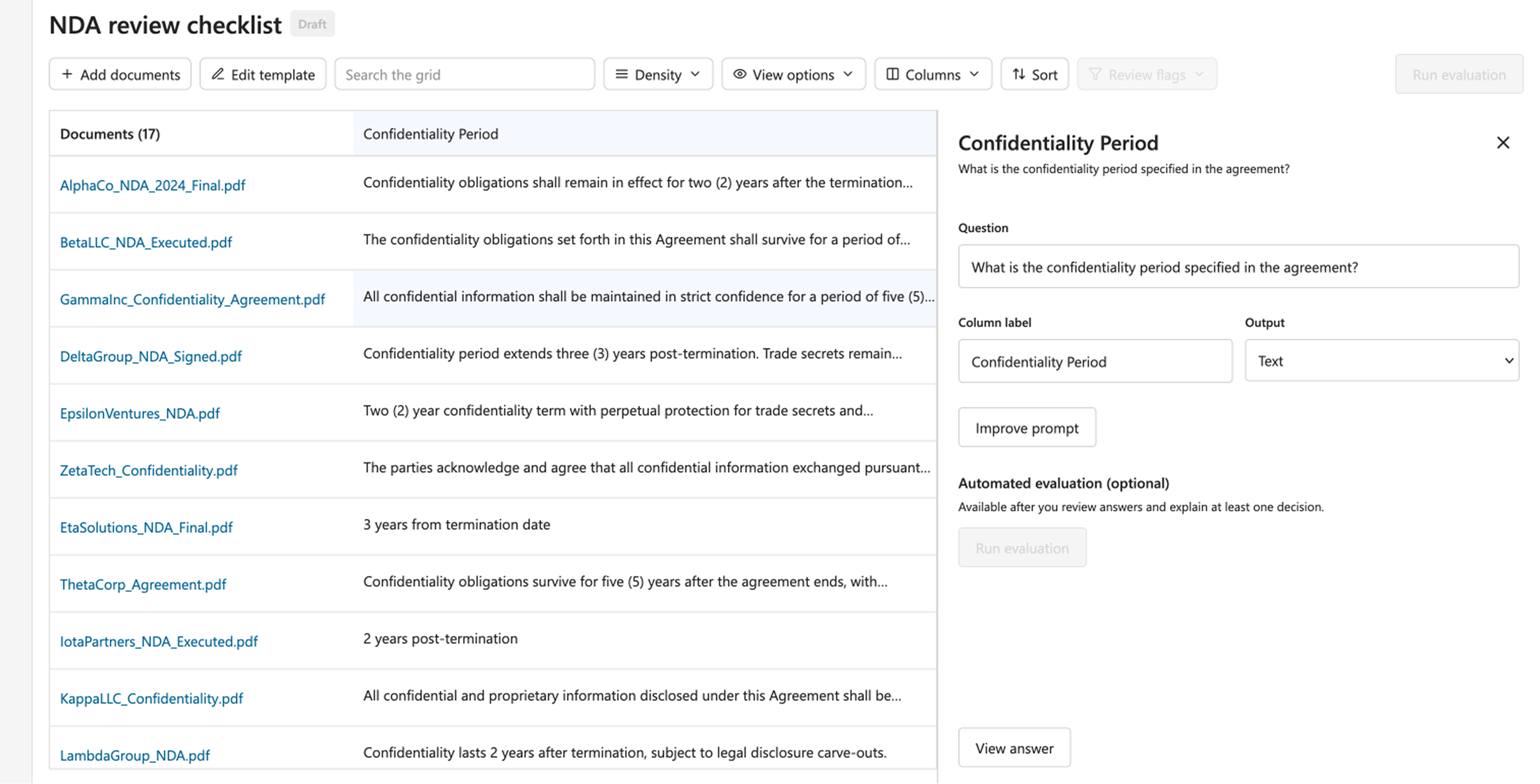

Grid and Panel: Source of Truth and Focus

The central design decision was how to structure the review interface across all three stages. The approach I used was a grid as the source of truth and a panel for focused action, the same principle I've applied across other legal AI work.

The grid gave lawyers orientation: where am I in this evaluation, what's been reviewed, what still needs attention, where are the gaps. A bird's-eye view, always available.

When they clicked a cell (an answer, a finding, a flagged item), a panel opened on the right. It was single-focused: one answer at a time, with the relevant contract excerpt alongside it. The lawyer could work through the evaluation answer by answer without losing sight of where they were in the full set.

From the panel, they could toggle to the underlying question and prompt: the rubric the AI was working from. This was a transparency mechanism. The lawyer could always see why the AI gave the answer it did, not just what it said. That visibility is what makes an AI output auditable rather than opaque.

The panel did the teaching a trainer would otherwise have to do. That's what made it ready to ship.

Tensions We Had to Resolve

Instead of pushing everyone toward one answer, I helped the team name the tensions shaping our choices. These became a shared framework for evaluating ideas as the direction kept shifting.

Speed vs. Defensibility

Moving quickly mattered, but only if outcomes could withstand legal scrutiny.

AI Confidence vs. Legal Uncertainty

AI systems sound confident. Legal work often requires acknowledging what's unknown.

Automation vs. Accountability

Assistance should reduce effort, not shift responsibility away from experts.

Centralised Correctness vs. Contextual Judgment

Legal interpretation depends on context. A single global answer is often misleading.

Naming these tensions moved conversations from arguing about features to talking about strategic choices. That shift mattered. It gave the team a way to evaluate new ideas without relitigating first principles every time.

Principles That Shaped Direction

From these discussions, I helped the team agree on principles to guide decisions as things changed.

Separate AI output from human judgment

AI could inform decisions, but authority remained with experts. The lawyer always had the final word.

Make uncertainty visible

Gaps, exceptions, and incomplete coverage needed to be explicit, not hidden behind a confidence score.

Gate automation behind evidence

Suggestions should appear only once sufficient human-reviewed context exists. Stage three only becomes available after stages one and two have built the foundation.

Design for intervention, not autopilot

The system should invite expert involvement at the moments that matter most, not minimise it.

These principles shaped not just this project but how other teams at Litera approached AI work. They became reference points in later AI discussions even as this project evolved.

Key Contributions

My main contribution was helping the team make better decisions, not just creating deliverables.

- Defined the three-stage workflow structure that kept humans in control at every phase

- Designed the grid and panel architecture that replaced nested modals with a single-plane, focused review experience

- Added the prompt visibility toggle, making the AI's reasoning auditable rather than opaque

- Established thresholds that signalled when expert intervention was required

- Helped leadership identify where automation increased risk instead of reducing effort

- Reframed AI assistance as decision support, not decision authority

What Changed Because of This Work

The way the team talked about the work changed.

Before: "How do we surface answers faster?"
After: "When is it safe to surface anything at all?"

Before: "Can the AI handle this?"
After: "What does the user need to decide responsibly?"

Before: "How do we build this?"
After: "Should this exist at all, and if so, under what conditions?"

- Product decisions focused more on risk and building trust

- Engineering got clearer guidelines for when AI should step in

- Leaders felt more confident changing or pausing work that carried too much risk

- The principles developed here became reference points for related AI efforts across the company

Reflection

Not all impact is visible in the final UI.

The most valuable thing I did on this project was uncover the risk nobody had named yet. A workflow that requires training to use isn't ready to ship. A product only months old, in a competitive market, can't afford to ship something that needs hand-holding. Making that risk visible early, clearly, and in terms the team could act on is what moved the work forward.

The grid and panel architecture came from the same instinct. Nested modals stack complexity and hide context. A single-plane layout with a focused panel does the teaching that a trainer would otherwise have to do. That's the difference between a feature that needs explaining and one that explains itself.

I also learned something about timing. In complex AI projects, rushing to build something visible can create more problems than it solves. The work that mattered most here happened before any UI existed: naming the tensions, establishing the principles, getting the team to agree on what responsible progress looked like.

Several of those principles carried forward into other AI work at Litera. That clarity still matters as the product evolves.

You can be the premium product or the fast one. Striving for both is how you end up with neither.